Distributed systems are everywhere, and advances in networking and hardware have made them increasingly common. A distributed system is a collection of independent computers that appears to its users as a single coherent system. It consists of autonomous computers linked by a computer network and equipped with distributed system software. This software enables the computers to coordinate their activities and to share system resources such as hardware, software and data.

We define a distributed system as one in which hardware or software components located at networked computers communicate and coordinate their actions only by passing messages. Put differently, it is a system with multiple components located on different machines that communicate and coordinate their actions in order to appear as a single coherent system to the end user. The components interact with one another in order to achieve a common goal.

We can also define a distributed system as “a collection of separate and independent software and hardware components, called nodes, that are networked and work together coherently by coordinating and communicating through message passing or events, to fulfil one goal”. As such, the distributed system will appear to the end user as if it were one interface or computer.

Examples of Distributed Systems:

There are numerous examples of distributed systems that are used in everyday life in a variety of applications. Examples of distributed systems are based on familiar and widely used computer networks: the Internet and the associated World Wide Web, Web Search, online gaming, email, social networks, e-Commerce etc. Here are a few examples of distributed systems:

The Internet:

The Internet is the most widely known example of a distributed system. The Internet facilitates the connection of many different computer systems in different geographical locations to share information and resources.

The Internet is a very large distributed system. It enables users, wherever they are, to make use of services such as the World Wide Web, email and file transfer. Multimedia services are also available on the Internet, enabling users to access audio and video data including music, radio and TV channels and to hold phone and video conferences.

World Wide Web:

The World Wide Web (WWW) is a popular service running on the Internet. It allows documents in one computer to refer to textual or nontextual information stored in other computers. For example, a document in the United States may contain references to the photograph of a rainforest in Africa or a music recording in Australia. Such references are highlighted on the user’s monitor, and when selected by the user, the system fetches the item from a remote server using appropriate protocols and displays the picture or plays the music on the client machine.

The Internet and the WWW have changed the way we perform our daily activities or carry out business. For example, a large fraction of airline and hotel reservations are now done through the Internet. Shopping through the Internet has dramatically increased during the past few years. Millions of people now routinely trade stocks through the Internet. Music lovers download and exchange CD-quality music, pushing old-fashioned CD and DVD purchases to near obsolescence. Digital libraries provide users instant access to archival information from the comfort of their homes.

Social Networks:

Internet-mediated social interaction has witnessed a dramatic growth in recent times. Millions of users now use their desktop or laptop computers or smartphones to post and exchange messages, photos, and video clips with their friends on social networking sites. Members of social networks can socialize by reading the profile pages of other members and contacting them.

Interactions among members often lead to the formation of virtual communities or clubs sharing common interests. The inputs from the members are handled by thousands of servers at one or more geographic locations, and these servers carry out specific tasks on behalf of the members—some deal with photos, some handle videos, some handle membership changes, etc. As membership grows, more servers are added to the pool, and powerful servers replace the slower ones.

Financial Trading:

As an example, we look at how distributed systems support financial trading markets. The financial industry has long been at the cutting edge of distributed systems technology with its need, in particular, for real-time access to a wide range of information sources (for example, current share prices and trends, economic and political developments). The industry employs automated monitoring and trading applications.

Telecommunication Networks:

Telephone and cellular networks are examples of distributed networks. Telephone networks have been around for over a century and started as an early example of a peer-to-peer network. Cellular networks are distributed networks with base stations physically distributed in areas called cells. As telephone networks have evolved to VoIP (voice over IP), they continue to grow in complexity as distributed networks.

Real-Time Distributed Systems:

Many industries use real-time systems distributed in various areas, locally and globally. For example, airlines use real-time flight control systems, and manufacturing plants use automation control systems. Taxi companies also employ a dispatch system, and e-commerce and logistics companies typically use real-time package tracking systems when customers order a product.

In urban traffic control networks, the computers at the different control points control traffic signals to minimize traffic congestion and maximize traffic flow.

Distributed Database Systems:

A distributed database is spread across multiple servers, physical locations, or both. The data can be replicated or duplicated across systems. Many popular applications use distributed databases and must take into account whether the distributed database system is heterogeneous or homogeneous. A heterogeneous distributed database allows for multiple data models and different database management systems. A gateway can be used to translate the data between nodes; this situation typically arises from merging systems and applications.

A homogeneous distributed database is a system in which every node has the same database management system and data model. This makes it easier to manage and to scale performance when adding new locations and nodes.

Sensor Networks:

The declining cost of hardware and the growth of wireless technology have led to new opportunities in the design of application-specific or special-purpose distributed systems. One such application is a sensor network. Each node is a miniature processor equipped with a few sensors that sense various environmental parameters, and is capable of wireless communication with other nodes. Such networks can potentially be used in a wide class of problems, ranging from battlefield surveillance and biological and chemical attack detection to healthcare, home automation, and ecological and habitat monitoring.

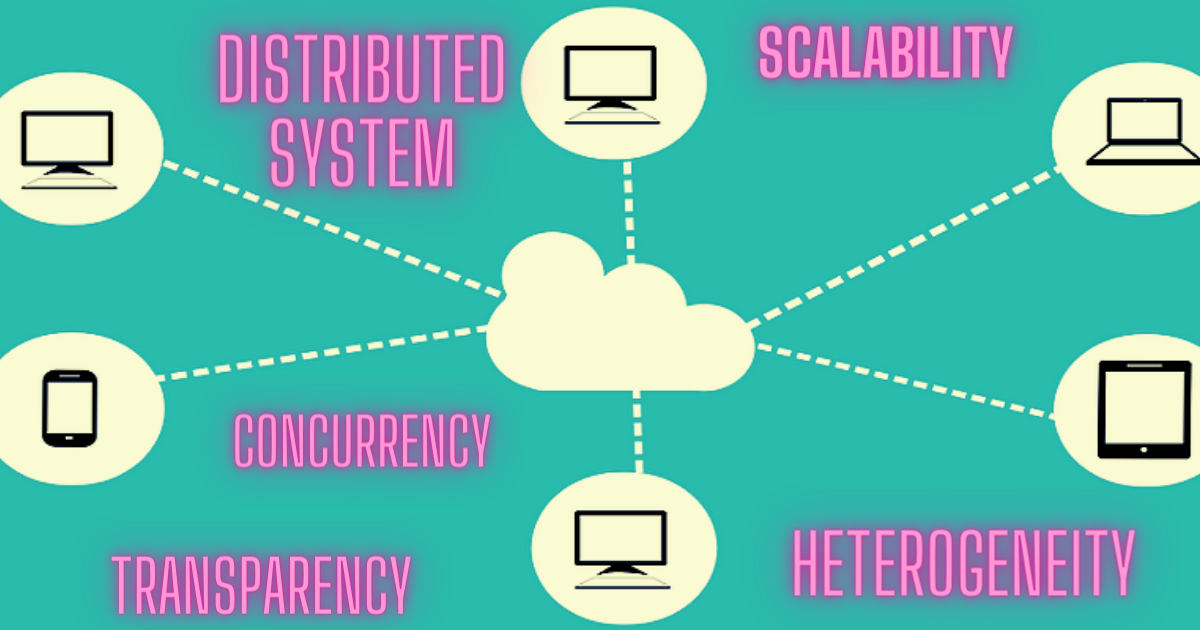

Characteristics of Distributed System (Features of Distributed System)

Resource Sharing: The main motivating factor for constructing and using distributed systems is resource sharing. Resources such as printers, files, web pages or database records are managed by servers of the appropriate type. For example, web servers manage web pages and other web resources. Resources are accessed by clients – for example, the clients of web servers are generally called browsers.

Openness: Openness is concerned with the extension and improvement of a distributed system. New components have to be integrated with existing components.

Concurrency: Multiple activities are executed at the same time. Components in distributed systems are executed in concurrent processes, which enables parallel processing of tasks. The integrity of the system may be violated if concurrent updates are not coordinated.

Scalability: In principle, distributed systems should be relatively easy to expand or scale. We scale a distributed system by adding more computers to the network, which lets it handle an increased workload. Components should not need to be changed when the scale of the system increases.

Fault Tolerance: This describes the system’s capability to tolerate faults, failures, and errors that occur in the system. This resilience can extend to tolerating the failure of entire servers or data centres. Fault-tolerant systems are designed to continue operating even if some components fail.

Availability: This is the proportion of time in which a system can perform the necessary functions. It can also be expressed as the probability that a service is available at a given point in time.
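As a rough worked example of this proportion, the sketch below computes availability from observed uptime and downtime. The function name `availability` and the sample figures are illustrative assumptions, not measurements from any real system.

```python
def availability(uptime_hours: float, downtime_hours: float) -> float:
    """Fraction of the observed period during which the service was usable."""
    total = uptime_hours + downtime_hours
    if total <= 0:
        raise ValueError("observation period must be positive")
    return uptime_hours / total


# Hypothetical figures: a 30-day month (720 hours) with 3.6 hours of outage.
monthly = availability(uptime_hours=716.4, downtime_hours=3.6)
print(f"{monthly:.3%}")
```

With these assumed figures the service would be available for about 99.5% of the month.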

Reliability: Reliability is the ability of a system to perform the functions a user requires, under stated conditions, for a specified period. It is a measure of the continuity of correct service.

Transparency: Transparency hides the complexity of a distributed system from users and application programs. Differences between the various computers and the ways in which they communicate are mostly hidden from users. The same holds for the internal organization of the distributed system. Ideally, users and applications interacting with a distributed system should perceive it as a single, coherent entity.

Heterogeneity: The components of a distributed system can differ in their networks, computer hardware, operating systems, programming languages and implementations by different developers. Components may be built using different technologies and may run on different hardware platforms, yet they must be able to communicate and work together seamlessly.

Advantages of Distributed System:

Distributed systems consist of autonomous computers that work together to give the appearance of a single coherent system. One important advantage is that they make it easier to integrate different applications running on different computers into a single system. Another advantage is that when properly designed, distributed systems scale well with respect to the size of the underlying network. Distributed systems allow a business to scale to accommodate more traffic.

Companies prefer decentralized and distributed systems because they allow companies to provide better customer service. Distributed systems have many benefits, including the following:

Resource Sharing: Allows systems to use each other’s resources.

Scalability: Permits new systems to be added as members of the overall system. In principle, a business can keep growing its system by adding more nodes when necessary.

Improved performance: Resources can be replicated, and the combined processing power of multiple computers can exceed that of a centralised system with multiple CPUs.

Improved reliability and availability: Because resources are spread across multiple computers, a disruption to one of them does not stop the whole system from providing its services.

Goal of Distributed System:

A distributed system should make resources easily accessible; it should reasonably hide the fact that resources are distributed across a network; it should be open; and it should be scalable.

Making resources accessible:

The main goal of a distributed system is to make it easy for the users (and applications) to access remote resources, and to share them in a controlled and efficient way. Resources can be just about anything, but typical examples include things like printers, computers, storage facilities, data, files, Web pages, and networks, to name just a few. There are many reasons for wanting to share resources. One obvious reason is that of economics. For example, it is cheaper to let a printer be shared by several users in a small office than having to buy and maintain a separate printer for each user. Likewise, it makes economic sense to share costly resources such as supercomputers, high-performance storage systems and other expensive peripherals.

Connecting users and resources also makes it easier to collaborate and exchange information, as is clearly illustrated by the success of the Internet with its simple protocols for exchanging files, mail, documents, audio, and video.

Distribution Transparency:

An important goal of a distributed system is to hide the fact that its processes and resources are physically distributed across multiple computers. A distributed system that is able to present itself to users and applications as if it were only a single computer system is said to be transparent.

Types of Transparency:

Access transparency: Access transparency deals with hiding differences in data representation and the way that resources can be accessed by users. At a basic level, we wish to hide differences in machine architectures, but more important is that we reach agreement on how data is to be represented by different machines and operating systems.

Location transparency: Location transparency refers to the fact that users cannot tell where a resource is physically located in the system. Naming plays an important role in achieving location transparency. In particular, location transparency can be achieved by assigning only logical names to resources, that is, names in which the location of a resource is not secretly encoded.
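The role of logical names can be illustrated with a minimal sketch, assuming a simple in-memory lookup table. The `NameService` class, the resource name and the addresses are all hypothetical; real systems use naming services such as DNS or a directory server.

```python
class NameService:
    """Maps logical resource names to their current network locations."""

    def __init__(self) -> None:
        self._locations: dict[str, str] = {}

    def register(self, logical_name: str, address: str) -> None:
        # Re-registering a name models moving the resource to a new machine.
        self._locations[logical_name] = address

    def resolve(self, logical_name: str) -> str:
        try:
            return self._locations[logical_name]
        except KeyError:
            raise LookupError(f"unknown resource: {logical_name}") from None


names = NameService()
names.register("printer/main-office", "10.0.0.17:9100")  # hypothetical address
before = names.resolve("printer/main-office")

# The printer is moved; clients keep using the same logical name.
names.register("printer/main-office", "10.0.4.2:9100")
after = names.resolve("printer/main-office")
print(before, "->", after)
```

Because clients only ever hold the logical name, nothing in their code reveals or depends on where the resource is located.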

Migration transparency: Distributed systems in which resources can be moved without affecting how those resources can be accessed are said to provide migration transparency.

Relocation transparency: Even stronger is the situation in which resources can be relocated while they are being accessed without the user or application noticing anything. In such cases, the system is said to support relocation transparency.

Replication transparency: Replication plays a very important role in distributed systems. For example, resources may be replicated to increase availability or to improve performance by placing a copy close to the place where it is accessed.

Replication transparency deals with hiding the fact that several copies of a resource exist. To hide replication from users, it is necessary that all replicas have the same name. Consequently, a system that supports replication transparency should generally support location transparency as well, because it would otherwise be impossible to refer to replicas at different locations.

Concurrency transparency: An important goal of distributed systems is to allow sharing of resources. In many cases, sharing resources is done in a cooperative way, as in the case of communication. However, there are also many examples of competitive sharing of resources. For example, two independent users may each have stored their files on the same file server or may be accessing the same tables in a shared database. In such cases, it is important that each user does not notice that the other is making use of the same resource. This phenomenon is called concurrency transparency.

Failure transparency: Making a distributed system failure transparent means that a user does not notice that a resource (which they may never have heard of) fails to work properly, and that the system subsequently recovers from that failure.

Openness:

Another important goal of distributed systems is openness. An open distributed system is a system that offers services according to standard rules that describe the syntax and semantics of those services. For example, in computer networks, standard rules govern the format, contents, and meaning of messages sent and received.

Another important goal for an open distributed system is that it should be easy to configure the system out of different components (possibly from different developers). Also, it should be easy to add new components or replace existing ones without affecting those components that stay in place. In other words, an open distributed system should also be extensible. For example, in an extensible system, it should be relatively easy to add parts that run on a different operating system, or even to replace an entire file system. As many of us know from daily practice, attaining such flexibility is easier said than done.

Scalability:

For an application to scale, it must run without performance degradations as load increases. The simplest and quickest way to increase the application’s capacity to handle load is to scale up the machine hosting it.

Scalability of a system can be measured along at least three different dimensions (Neuman, 1994). First, a system can be scalable with respect to its size, meaning that we can easily add more users and resources to the system. Second, a geographically scalable system is one in which the users and resources may lie far apart. Third, a system can be administratively scalable, meaning that it can still be easy to manage even if it spans many independent administrative organizations.

Challenges of Distributed System:

The challenges arising from the construction of distributed systems are the heterogeneity of their components, openness (which allows components to be added or replaced), security, scalability – the ability to work well when the load or the number of users increases – failure handling, concurrency of components, transparency and providing quality of service.

Heterogeneity:

Distributed systems must be constructed from a variety of different networks, operating systems, computer hardware and programming languages. The Internet communication protocols mask the differences in networks, and middleware can deal with the other differences.

The Internet enables users to access services and run applications over a heterogeneous collection of computers and networks. Although the Internet consists of many different sorts of network, their differences are masked by the fact that all of the computers attached to them use the Internet protocols to communicate with one another. Different programming languages use different representations for characters and data structures such as arrays and records. These differences must be addressed if programs written in different languages are to be able to communicate with one another. Programs written by different developers cannot communicate with one another unless they use common standards.
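The data-representation problem can be made concrete with a small sketch using Python's standard `struct` module. It shows one integer encoded in two byte orders, what happens when the receiver assumes the wrong one, and how an agreed wire format avoids the problem. The sample value and the choice of big-endian as the common format are assumptions made for illustration.

```python
import struct

value = 1025  # 0x00000401

# The same 32-bit integer laid out in the two common byte orders.
little_endian = struct.pack("<I", value)
big_endian = struct.pack(">I", value)
print(little_endian.hex(), big_endian.hex())

# Reading big-endian bytes as if they were little-endian yields a wrong value.
(misread,) = struct.unpack("<I", big_endian)

# Agreeing on one wire format (here: big-endian, often called network order)
# lets both sides recover the original value regardless of their native order.
(decoded,) = struct.unpack(">I", big_endian)
print(misread, decoded)
```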

Heterogeneity refers to the diversity or variety of hardware, software, and network technologies that are interconnected to form the distributed system.

Hardware Heterogeneity:

- Distributed systems often involve nodes (computers or devices) with varying hardware configurations, such as different CPU architectures, memory capacities, storage devices, and network interfaces.

- Hardware heterogeneity can pose challenges in terms of resource management, load balancing, and ensuring compatibility and interoperability across diverse hardware platforms.

Software Heterogeneity:

- Nodes in a distributed system may run different operating systems, middleware, programming languages, libraries, and application software.

- Software heterogeneity introduces complexity in terms of software development, deployment, and maintenance, as well as interoperability issues between different software components and platforms.

Network Heterogeneity:

- Distributed systems span across networks with diverse characteristics, such as varying bandwidth, latency, reliability, and topology.

- Network heterogeneity affects communication patterns, data transmission efficiency, and overall system performance, particularly in wide-area distributed systems that operate over the internet or across multiple organizational networks.

Data Heterogeneity:

- Distributed systems often deal with heterogeneous data sources and formats, including structured and unstructured data, databases, file systems, and data streams.

- Data heterogeneity presents challenges in data integration, data consistency, data synchronization, and data interoperability across distributed data repositories.

Administrative Heterogeneity:

- Distributed systems may be managed and administered by different organizations, departments, or individuals, each with its own policies, procedures, security mechanisms, and administrative domains.

- Administrative heterogeneity introduces challenges related to security management, access control, authentication, authorization, and policy enforcement across heterogeneous administrative domains.

Middleware:

Complex distributed systems have significant degrees of heterogeneity. In order to support heterogeneous computers and networks while offering a single-system view, distributed systems are often organized by means of a layer of software that is logically placed between a higher-level layer consisting of users and applications, and a layer underneath consisting of operating systems and basic communication facilities.

The layer of software that simplifies the task of tying complex subsystems together or connecting software components is called middleware. It is an extension of the services offered by the operating system and is logically positioned between the application layer and the operating system layer of the individual machines as shown in Fig. below.

The term middleware applies to a software layer that provides a programming abstraction as well as masking the heterogeneity of the underlying networks, hardware, operating systems and programming languages.

The Common Object Request Broker Architecture (CORBA) is an example. Some middleware, such as Java Remote Method Invocation (RMI), supports only a single programming language. Most middleware is implemented over the Internet protocols, which themselves mask the differences of the underlying networks, but all middleware deals with the differences in operating systems and hardware.

In addition to solving the problems of heterogeneity, middleware provides a uniform computational model for use by the programmers of servers and distributed applications. Possible models include remote object invocation, remote event notification, remote SQL access and distributed transaction processing. For example, CORBA provides remote object invocation, which allows an object in a program running on one computer to invoke a method of an object in a program running on another computer. Its implementation hides the fact that messages are passed over a network in order to send the invocation request and its reply.
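The idea of hiding message passing behind an ordinary method call can be sketched as follows. This is not CORBA or RMI: the `Proxy`, `Skeleton` and `Calculator` classes are hypothetical, JSON is an assumed message format, and a direct function call stands in for the network.

```python
import json


class Calculator:
    """The 'remote' object; in a real system it would live on another machine."""

    def add(self, a: int, b: int) -> int:
        return a + b


class Skeleton:
    """Server side: decodes a request message and calls the target object."""

    def __init__(self, target: object) -> None:
        self._target = target

    def handle(self, request: bytes) -> bytes:
        message = json.loads(request.decode("utf-8"))
        method = getattr(self._target, message["method"])
        result = method(*message["args"])
        return json.dumps({"result": result}).encode("utf-8")


class Proxy:
    """Client side: turns method calls into request messages."""

    def __init__(self, send) -> None:
        # `send` stands in for the network: bytes in, bytes out.
        self._send = send

    def __getattr__(self, name: str):
        def remote_call(*args):
            request = json.dumps({"method": name, "args": list(args)})
            reply = self._send(request.encode("utf-8"))
            return json.loads(reply.decode("utf-8"))["result"]

        return remote_call


skeleton = Skeleton(Calculator())
calculator = Proxy(send=skeleton.handle)  # direct call replaces a real socket
total = calculator.add(2, 3)  # looks like a local method call
print(total)
```

The caller never sees the request and reply messages. A production middleware would add error handling, access control over which methods may be invoked, and a real transport.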

Heterogeneity and mobile code:

The term mobile code is used to refer to program code that can be transferred from one computer to another and run at the destination – Java applets are an example. Code suitable for running on one computer is not necessarily suitable for running on another because executable programs are normally specific both to the instruction set and to the host operating system. The virtual machine approach provides a way of making code executable on a variety of host computers: the compiler for a particular language generates code for a virtual machine instead of code for a particular hardware instruction set.

For example, the Java compiler produces code for a Java virtual machine, which executes it by interpretation. The Java virtual machine needs to be implemented once for each type of computer to enable Java programs to run. Today, the most commonly used form of mobile code is the inclusion of JavaScript programs in some web pages loaded into client browsers.

Openness:

Distributed systems should be extensible. The openness of a computer system is the characteristic that determines whether the system can be extended and re-implemented in various ways. The openness of distributed systems is determined primarily by the degree to which new resource-sharing services can be added and be made available for use by a variety of client programs.

Security:

Many of the information resources that are made available and maintained in distributed systems have a high intrinsic value to their users. Their security is therefore of considerable importance. Security for information resources has three components: confidentiality (protection against disclosure to unauthorized individuals), integrity (protection against alteration or corruption), and availability (protection against interference with the means to access the resources).

Encryption can be used to provide adequate protection of shared resources and to keep sensitive information secret when it is transmitted in messages over a network.

Although the Internet allows a program in one computer to communicate with a program in another computer irrespective of its location, security risks are associated with allowing free access to all of the resources in an intranet. Although a firewall can be used to form a barrier around an intranet, restricting the traffic that can enter and leave, this does not deal with ensuring the appropriate use of resources by users within an intranet, or with the appropriate use of resources on the Internet that are not protected by firewalls.

Scalability:

A distributed system is scalable if the cost of adding a user is a constant amount in terms of the resources that must be added. Distributed systems operate effectively and efficiently at many different scales, ranging from a small intranet to the Internet. A system is described as scalable if it will remain effective when there is a significant increase in the number of resources and the number of users. The number of computers and servers in the Internet has increased dramatically.

Failure handling:

Computer systems sometimes fail. When faults occur in hardware or software, programs may produce incorrect results or may stop before they have completed the intended computation.

Failures in a distributed system are partial – that is, some components fail while others continue to function. Therefore the handling of failures is particularly difficult. The following techniques for dealing with failures are discussed here:

Detecting failures: Some failures can be detected. For example, checksums can be used to detect corrupted data in a message or a file. It is difficult or even impossible to detect some other failures, such as a remote crashed server in the Internet. The challenge is to manage in the presence of failures that cannot be detected but may be suspected.
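Checksum-based detection can be sketched with the CRC-32 function from Python's standard `zlib` module. The message layout (a 4-byte checksum followed by the payload) and the helper names `attach_checksum` and `verify` are assumptions made for this example.

```python
import zlib


def attach_checksum(payload: bytes) -> bytes:
    """Prefix the payload with its CRC-32 as 4 big-endian bytes."""
    return zlib.crc32(payload).to_bytes(4, "big") + payload


def verify(message: bytes) -> bytes:
    """Return the payload if its checksum matches, otherwise raise ValueError."""
    expected = int.from_bytes(message[:4], "big")
    payload = message[4:]
    if zlib.crc32(payload) != expected:
        raise ValueError("checksum mismatch: message was corrupted")
    return payload


message = attach_checksum(b"transfer 100 to account 42")
assert verify(message) == b"transfer 100 to account 42"

# Flip one bit in the payload to simulate corruption in transit.
corrupted = message[:-1] + bytes([message[-1] ^ 0x01])
try:
    verify(corrupted)
except ValueError as error:
    print(error)
```

A CRC catches accidental corruption only; it offers no protection against deliberate tampering.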

Masking failures: Some failures that have been detected can be hidden or made less severe. Two examples of hiding failures:

1. Messages can be retransmitted when they fail to arrive.

2. File data can be written to a pair of disks so that if one is corrupted, the other may still be correct.
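The first of these techniques, retransmission, can be sketched as a bounded retry loop. The `FlakyChannel` class is a hypothetical stand-in for an unreliable network that loses a fixed number of messages; in practice a loss would usually be inferred from a timeout while waiting for an acknowledgement.

```python
class MessageLost(Exception):
    """Raised when no acknowledgement arrives for a sent message."""


class FlakyChannel:
    """Hypothetical channel that loses the first `drops` messages it is given."""

    def __init__(self, drops: int) -> None:
        self._remaining_drops = drops

    def send(self, message: str) -> str:
        if self._remaining_drops > 0:
            self._remaining_drops -= 1
            raise MessageLost(message)
        return f"ack:{message}"


def send_with_retransmission(channel, message: str, max_attempts: int = 5):
    """Resend until acknowledged; give up after `max_attempts` tries."""
    for attempt in range(1, max_attempts + 1):
        try:
            return channel.send(message), attempt
        except MessageLost:
            continue  # the loss is masked from the caller by trying again
    raise MessageLost(f"{message!r} not delivered after {max_attempts} attempts")


ack, attempts = send_with_retransmission(FlakyChannel(drops=2), "hello")
print(ack, "after", attempts, "attempts")
```

The caller sees a single successful send even though two messages were lost. The attempt limit matters: without it, a permanently unreachable receiver would block the sender forever.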

Tolerating failures: Most of the services in the Internet do exhibit failures – it would not be practical for them to attempt to detect and hide all of the failures that might occur in such a large network with so many components. Their clients can be designed to tolerate failures, which generally involves the users tolerating them as well.

Recovery from failures: Recovery involves the design of software so that the state of permanent data can be recovered or ‘rolled back’ after a server has crashed. In general, the computations performed by some programs will be incomplete when a fault occurs, and the permanent data that they update (files and other material stored in permanent storage) may not be in a consistent state.
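The idea of rolling data back to a consistent state can be sketched in memory, assuming a snapshot is taken before each update. The `transaction` and `transfer` helpers and the account data are hypothetical. A real server would keep the snapshot or an undo log on permanent storage, since an actual crash destroys anything held only in memory.

```python
import copy
from contextlib import contextmanager


@contextmanager
def transaction(store: dict):
    """Restore `store` to its prior contents if the block raises."""
    snapshot = copy.deepcopy(store)
    try:
        yield store
    except Exception:
        store.clear()
        store.update(snapshot)  # roll back to the last consistent state
        raise


def transfer(store: dict, source: str, target: str, amount: int,
             crash_midway: bool = False) -> None:
    with transaction(store):
        store[source] -= amount
        if crash_midway:
            raise RuntimeError("simulated crash between the two updates")
        store[target] += amount


accounts = {"alice": 100, "bob": 50}
try:
    transfer(accounts, "alice", "bob", 70, crash_midway=True)
except RuntimeError:
    pass

print(accounts)  # the half-finished transfer has been undone
```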

Concurrency:

The presence of multiple users in a distributed system is a source of concurrent requests to its resources. Both services and applications provide resources that can be shared by clients in a distributed system. There is therefore a possibility that several clients will attempt to access a shared resource at the same time.

For example, a data structure that records bids for an auction may be accessed very frequently when it gets close to the deadline time. The process that manages a shared resource could take one client request at a time. But that approach limits throughput. Therefore services and applications generally allow multiple client requests to be processed concurrently.
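The auction example can be sketched with a thread pool that serves many bid requests at once while a lock protects the shared record. The `Auction` class, the client names and the pool size are assumptions made for illustration.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class Auction:
    """Records the highest bid; safe to call from many threads at once."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self.highest_bid = 0
        self.highest_bidder = None

    def place_bid(self, bidder: str, amount: int) -> bool:
        # Only the compare-and-update step is serialized; any per-request work
        # done before this point could proceed in parallel.
        with self._lock:
            if amount > self.highest_bid:
                self.highest_bid = amount
                self.highest_bidder = bidder
                return True
            return False


auction = Auction()
bids = [(f"client-{i}", amount) for i, amount in enumerate(range(1, 201))]

# Eight worker threads serve client requests concurrently.
with ThreadPoolExecutor(max_workers=8) as pool:
    list(pool.map(lambda bid: auction.place_bid(*bid), bids))

print(auction.highest_bidder, auction.highest_bid)
```

Whatever order the threads run in, the recorded winner is the bidder with the largest amount, because the check and the update happen as one step under the lock.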

Transparency:

Transparency is defined as the concealment from the user and the application programmer of the separation of components in a distributed system, so that the system is perceived as a whole rather than as a collection of independent components. The implications of transparency are a major influence on the design of the system software.

The aim is to make certain aspects of distribution invisible to the application programmer so that they need only be concerned with the design of their particular application. For example, they need not be concerned with its location or the details of how its operations are accessed by other components, or whether it will be replicated or migrated. Even failures of networks and processes can be presented to application programmers in the form of exceptions – but they must be handled.

Access transparency enables local and remote resources to be accessed using identical operations.

Location transparency enables resources to be accessed without knowledge of their physical or network location (for example, which building or IP address).

Concurrency transparency enables several processes to operate concurrently using shared resources without interference between them.

Replication transparency enables multiple instances of resources to be used to increase reliability and performance without knowledge of the replicas by users or application programmers.

Failure transparency enables the concealment of faults, allowing users and application programs to complete their tasks despite the failure of hardware or software components.

Mobility transparency allows the movement of resources and clients within a system without affecting the operation of users or programs.

Performance transparency allows the system to be reconfigured to improve performance as loads vary.

Scaling transparency allows the system and applications to expand in scale without change to the system structure or the application algorithms.
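Replication and failure transparency can be illustrated together with a minimal sketch in which a client-side wrapper presents several copies as one store and silently fails over when a copy is down. The `Replica` and `ReplicatedStore` classes are hypothetical, and the sketch covers reads only; keeping replicas consistent under writes is a separate problem.

```python
class ReplicaDown(Exception):
    """Raised by a replica that cannot serve the request."""


class Replica:
    def __init__(self, name: str, data: dict, alive: bool = True) -> None:
        self.name = name
        self.data = data
        self.alive = alive

    def read(self, key: str) -> str:
        if not self.alive:
            raise ReplicaDown(self.name)
        return self.data[key]


class ReplicatedStore:
    """Presents several replicas as one store and hides individual failures."""

    def __init__(self, replicas: list) -> None:
        self._replicas = replicas

    def read(self, key: str) -> str:
        for replica in self._replicas:
            try:
                return replica.read(key)
            except ReplicaDown:
                continue  # fail over to the next copy without telling the caller
        raise ReplicaDown("all replicas are unavailable")


contents = {"motd": "hello"}
store = ReplicatedStore([
    Replica("replica-a", contents, alive=False),  # simulated crash
    Replica("replica-b", contents),
])
print(store.read("motd"))
```

The caller uses a single `read` operation and cannot tell how many copies exist or that one of them has failed.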

Reasons for Building Distributed Systems:

Over the past years, distributed systems have gained substantial importance. The reasons for their growing importance are explained below:

Geographically Distributed Environment: First, in many situations, the computing environment itself is geographically distributed. As an example, consider a banking network. Each bank is supposed to maintain the accounts of its customers. In addition, banks communicate with one another to monitor interbank transactions or record fund transfers from geographically dispersed automated teller machines (ATMs). Another common example of a geographically distributed computing environment is the Internet, which has deeply influenced our way of life. The mobility of the users has added a new dimension to the geographic distribution.

Speed Up: Second, there is the need for speeding up computation. The speed of computation in traditional uniprocessors is fast approaching the physical limit. While multicore, superscalar, and very large instruction word (VLIW) processors stretch the limit by introducing parallelism at the architectural level, the techniques do not scale well beyond a certain level.

An alternative technique of deriving more computational power is to use multiple processors. Dividing a total problem into smaller subproblems and assigning these subproblems to separate physical processors that can operate concurrently are potentially an attractive method of enhancing the speed of computation. Moreover, this approach promotes better scalability, where the users or administrators can incrementally increase the computational power by purchasing additional processing elements or resources. This concept is extensively used by the social networking sites for the concurrent upload and download of the photos and videos of millions of customers.
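The divide-and-combine pattern can be sketched as follows, assuming a sum of squares as the total problem. The helper names are hypothetical. Worker threads stand in for separate processors here; in CPython they would not actually speed up this CPU-bound work, so the sketch shows only the structure of the approach.

```python
from concurrent.futures import ThreadPoolExecutor


def split(items: list, parts: int) -> list:
    """Divide `items` into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(items), parts)
    chunks, start = [], 0
    for index in range(parts):
        end = start + size + (1 if index < extra else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def parallel_sum_of_squares(numbers: list, workers: int = 4) -> int:
    """Solve each subproblem on its own worker, then combine the results."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = pool.map(lambda chunk: sum(n * n for n in chunk),
                                split(numbers, workers))
        return sum(partial_sums)


numbers = list(range(1, 1001))
print(parallel_sum_of_squares(numbers))
```

Adding capacity then amounts to raising the number of workers and chunks, which mirrors the incremental scaling described above.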

Resource Sharing: Third, there is a need for resource sharing. Here, the term resource represents both hardware and software resources. The user of computer A may want to use a fancy laser printer connected with computer B, or the user of computer B may need some extra disk space available with computer C for storing a large file.

In a network of workstations, workstation A may want to use the idle computing powers of workstations B and C to enhance the speed of a particular computation. Through Google Docs, Google lets us share their software for word processing, spreadsheet application, and presentation creation without anything else on our machine. Cloud computing essentially outsources the computing infrastructure of a user or an organization to data centers—these centers allow thousands of their clients to share their computing resources through the Internet for efficient computing at an affordable cost.

Fault Tolerance: Fourth, powerful uniprocessors or computing systems built around a single central node are prone to a complete collapse when the processor fails. Many users consider this to be risky. Distributed systems have the potential to remedy this by using appropriate fault tolerance techniques: when a fraction of the processors fail, the remaining processors take over the tasks of the failed processors and keep the application running.

In many fault tolerant distributed systems, processors routinely check one another at predefined intervals, allowing for automatic failure detection, diagnosis, and eventual recovery. A distributed system thus provides an excellent opportunity for incorporating fault tolerance and graceful degradation.
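
One common way to realize this kind of periodic mutual checking is a heartbeat scheme. The sketch below is a minimal illustration, not a method the text prescribes: the class name `HeartbeatMonitor`, its methods, and the timeout value are all assumptions, and time is passed in explicitly so the example stays deterministic.

```python
class HeartbeatMonitor:
    """Tracks the last time each node reported in and flags the silent ones."""

    def __init__(self, timeout):
        self.timeout = timeout   # seconds of silence tolerated (assumed value)
        self.last_seen = {}      # node name -> time of its latest heartbeat

    def heartbeat(self, node, now):
        """Record that `node` was heard from at time `now`."""
        self.last_seen[node] = now

    def suspected(self, now):
        """Return the nodes whose latest heartbeat is older than the timeout."""
        return sorted(
            node for node, seen in self.last_seen.items()
            if now - seen > self.timeout
        )
```

If nodes A and B both report at time 0 and only A reports again at time 2, then with a timeout of 3 the call `suspected(4)` returns only B. A suspected node is a candidate for diagnosis and recovery; the monitor alone cannot tell a crashed node from a slow one.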

Distributed System Models:

Distributed system models are also referred to as distributed system design. They explain the common properties and design issues of distributed systems.

Systems that are intended for use in real-world environments should be designed to function correctly in the widest possible range of circumstances and in the face of many possible difficulties and threats. Distributed systems of different types share important underlying properties and give rise to common design problems.

Each type of model is intended to provide an abstract, simplified but consistent description of a relevant aspect of distributed system design.

Distributed system models are divided into two types:

1. Architectural model

2. Fundamental Model

Architectural Models:

An architectural model deals with the organization of components and their interrelationships. The architecture of a system is its structure in terms of separately specified components and their interrelationships.

An architectural model of a distributed system defines the way in which the components of the system interact with each other and the way in which they are mapped onto an underlying network of computers.

An architectural model of a distributed system is concerned with the placement of its components and the relationship between them. The overall goal of architectural model is to ensure that the structure will meet present and likely future demands on it.

Major concerns are to make the system reliable, manageable, adaptable and cost-effective. The architectural design of a building has similar aspects – it determines not only its appearance but also its general structure and architectural style and provides a consistent frame of reference for the design.

Architectural models describe a system in terms of the computational and communication tasks performed by its computational elements; the computational elements being individual computers or aggregates of them supported by appropriate network interconnections.

The two most commonly used forms of Architectural Model for distributed systems are:

· Client-Server Model

· Peer-to-Peer Model

Client-Server Model:

The client–server model is a widely accepted model for designing distributed systems. In the client–server model, client processes interact with individual server processes on separate host computers in order to access the shared resources that the servers manage. Client processes request a service, and server processes provide it: a client sends a request to a server, which then produces a result that is returned to the client.

Servers may in turn be clients of other servers. For example, a web server is often a client of a local file server that manages the files in which the web pages are stored.

A simple example of client–server communication is the Domain Name System (DNS): clients request the network addresses of Internet domain names, and DNS returns the addresses to the clients. Another example is a search engine like Google. When a client submits a query about a document, the search engine consults its servers and returns pointers to the web pages that may contain information about that document.
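
The request and reply pattern can be sketched with a toy name-lookup service. This is an illustration only: the name table, the one-line text protocol, and the function names are hypothetical, and the real DNS protocol works very differently. A connected socket pair replaces a network connection so the sketch runs on a single machine.

```python
import socket
import threading

# Hypothetical name table using documentation-only addresses.
NAME_TABLE = {"example.org": "192.0.2.10", "example.net": "192.0.2.20"}


def serve(conn):
    """Server process: answer one request per line until the client disconnects."""
    with conn, conn.makefile("r", encoding="utf-8") as reader, \
            conn.makefile("w", encoding="utf-8") as writer:
        for line in reader:
            writer.write(NAME_TABLE.get(line.strip(), "NOT_FOUND") + "\n")
            writer.flush()


def lookup(reader, writer, name):
    """Client side: send a request, then block until the reply arrives."""
    writer.write(name + "\n")
    writer.flush()
    return reader.readline().strip()


def demo():
    client_sock, server_sock = socket.socketpair()
    server = threading.Thread(target=serve, args=(server_sock,))
    server.start()
    with client_sock, client_sock.makefile("r", encoding="utf-8") as reader, \
            client_sock.makefile("w", encoding="utf-8") as writer:
        answers = [lookup(reader, writer, n) for n in ("example.org", "unknown.test")]
    server.join()   # the server loop ends once the client side is closed
    return answers
```

The roles are asymmetric: only the client initiates an exchange, and only the server owns the shared resource, here the name table.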

Peer-to-Peer Model:

In this architecture all of the processes involved in a task or activity play similar roles, interacting cooperatively as peers without any distinction between client and server processes or the computers on which they run. In practical terms, all participating processes run the same program and offer the same set of interfaces to each other.
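
A minimal sketch of this symmetry, assuming a hypothetical `Peer` class in which every instance both offers and consumes the same interface (the file-sharing scenario and all names are illustrative):

```python
class Peer:
    """Every peer runs the same code and exposes the same interface."""

    def __init__(self, name, files):
        self.name = name
        self.files = dict(files)   # resources this peer holds locally
        self.neighbours = []       # other peers it can talk to

    def connect(self, other):
        """Link two peers; the relationship is symmetric."""
        self.neighbours.append(other)
        other.neighbours.append(self)

    def serve(self, filename):
        """Server-like role: hand out a local file, or None if absent."""
        return self.files.get(filename)

    def fetch(self, filename):
        """Client-like role: ask neighbours via the same `serve` interface."""
        for peer in self.neighbours:
            content = peer.serve(filename)
            if content is not None:
                self.files[filename] = content   # now this peer can serve it too
                return peer.name
        return None
```

After `a.connect(b)`, peer `a` can fetch from `b` and `b` can fetch from `a` through identical calls; neither is designated as the server.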

Fundamental Models:

Fundamental models are based on some fundamental properties such as performance, reliability and security. All of the models share the design requirements of achieving the performance and reliability characteristics of processes and networks and ensuring the security of the resources in the system.

Fundamental Models are concerned with a formal description of the properties that are common in all of the architectural models.

Fundamental models are based on fundamental properties that allow us to be more specific about the characteristics of a system and the failures and security risks it might exhibit.

The fundamental properties are addressed by three models:

- Interaction Model

- Failure Model

- Security Model

Interaction Model:

The interaction model is concerned with the performance of processes and communication channels and the absence of a global clock.

Computation occurs within processes; the processes interact by passing messages, resulting in communication (information flow) and coordination (synchronization and ordering of activities) between processes. Interacting processes are affected by two significant factors:

· Performance of communication channels

· Computer clocks and timing events

Performance of communication channels:

Communication over a computer network has performance characteristics relating to latency, bandwidth and jitter.

Latency: The delay between the sending of a message by one process and its receipt by another is referred to as latency.

Bandwidth: The bandwidth of a computer network is the total amount of information that can be transmitted over it in a given time.

Jitter: Jitter is the variation in the time taken to deliver a series of messages.
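
These three quantities can be related with a small calculation. The sketch below assumes a simple first-order model in which delivery time is latency plus message size divided by bandwidth, and it measures jitter as the spread between the slowest and fastest delivery; both choices, and the function names, are assumptions made for illustration.

```python
def transfer_time(size_bits, latency_s, bandwidth_bps):
    """Estimated time to deliver one message: fixed delay plus transmission time."""
    return latency_s + size_bits / bandwidth_bps


def jitter(delivery_times_s):
    """One simple jitter measure: slowest delivery minus fastest delivery."""
    return max(delivery_times_s) - min(delivery_times_s)
```

For example, an 8,000,000-bit message over a 10,000,000 bit/s link with 0.05 s latency takes about 0.85 s under this model, and deliveries of 0.10 s, 0.12 s and 0.11 s have a jitter of about 0.02 s.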

Computer clocks and timing events:

Each computer in a distributed system has its own internal clock, which can be used by local processes to obtain the value of the current time. Therefore two processes running on different computers can each associate timestamps with their events. However, even if the two processes read their clocks at the same time, their local clocks may supply different time values. This is because computer clocks drift from perfect time and, more importantly, their drift rates differ from one another.

The term clock drift rate refers to the rate at which a computer clock deviates from a perfect reference clock. Even if the clocks on all the computers in a distributed system are set to the same time initially, their clocks will eventually vary quite significantly unless corrections are applied.
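
The effect of differing drift rates can be made concrete with a short calculation. The sketch assumes a linear drift model and hypothetical drift rates of one microsecond per second in opposite directions; the function names are illustrative.

```python
def clock_reading(real_time_s, drift_rate, offset_s=0.0):
    """Value shown by a clock that gains `drift_rate` seconds per real second."""
    return offset_s + real_time_s * (1.0 + drift_rate)


def skew(real_time_s, drift_a, drift_b):
    """Difference between two clocks that were set identically at time zero."""
    return abs(clock_reading(real_time_s, drift_a) - clock_reading(real_time_s, drift_b))
```

With drift rates of +0.000001 and -0.000001, `skew(86400, 1e-6, -1e-6)` shows the two clocks roughly 0.17 seconds apart after one day, even though they started in agreement. Without periodic correction the gap keeps growing linearly.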

Two variants of the Interaction Model:

Two variants of the Interaction model are the Synchronous distributed system and the Asynchronous distributed system models.

Synchronous distributed systems are defined to be systems in which: the time to execute each step of a process has a known lower and upper bound; each transmitted message is received within a known bounded time; each process has a local clock whose drift rate from real time has a known bound. It is difficult to arrive at realistic values and to provide guarantees of the chosen values.

Asynchronous distributed systems have no bounds on process execution speeds, message transmission delays and clock drift rates. This exactly models the Internet, in which there is no intrinsic bound on server or network load and therefore on how long it takes, for example, to transfer a file using FTP. Actual distributed systems tend to be asynchronous in nature.

Failure Model:

The failure model attempts to give a precise specification of the faults that can be exhibited by processes and communication channels. It defines reliable communication and correct processes.

In a distributed system both processes and communication channels may fail. The failure model defines the ways in which failure may occur in order to provide an understanding of the effects of failures.

There are three categories of failures:

- Omission Failures

- Byzantine (or arbitrary) failures

- Timing Failures

Omission Failures:

These refer to cases when a process or communication channel fails to perform actions that it is supposed to do.

Process Omission Failures: The main omission failure of a process is to crash, i.e., the process has halted and will not execute any further steps. Other processes may be able to detect such a crash by the fact that the process repeatedly fails to respond to invocation messages. A process crash is detected via timeouts, that is, a method in which one process allows a fixed period of time for something to occur. In an asynchronous system a timeout can indicate only that a process is not responding: it may have crashed or may be slow, or the messages may not have arrived.

A process crash is called fail-stop if other processes can detect with certainty that the process has crashed. Fail-stop behaviour can be produced in a synchronous system if the processes use timeouts to detect when other processes fail to respond and messages are guaranteed to be delivered.

Communication Omission Failures:

1) Send-Omission Failure: The loss of messages between the sending process and the outgoing message buffer.

2) Receive-Omission Failure: The loss of messages between the incoming message buffer and the receiving process.

3) Channel Omission Failure: The loss of messages in between, i.e. between the outgoing buffer and the incoming buffer.

Byzantine or Arbitrary Failures:

The term Arbitrary or Byzantine failure is used to describe the worst possible failure semantics, in which any type of error may occur. For example, a process may set wrong values in its data items, or it may return a wrong value in response to an invocation.

An arbitrary failure of a process is one in which it arbitrarily omits intended processing steps or takes unintended processing steps. Arbitrary failures in processes cannot be detected by seeing whether the process responds to invocations, because it might arbitrarily omit to reply.

Communication channels can suffer from arbitrary failures; for example, message contents may be corrupted, nonexistent messages may be delivered or real messages may be delivered more than once. Arbitrary failures of communication channels are rare because the communication software is able to recognize them and reject the faulty messages. For example, checksums are used to detect corrupted messages, and message sequence numbers can be used to detect nonexistent and duplicated messages.
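
The checksum and sequence-number checks can be sketched as follows. This is a minimal illustration: the packet layout, the `Receiver` class, and the choice of CRC-32 are assumptions, and the sketch only rejects corrupted and duplicated messages rather than every form of arbitrary failure.

```python
import zlib


def checksum(seq, payload):
    """CRC-32 over the sequence number and the payload bytes."""
    return zlib.crc32(seq.to_bytes(4, "big") + payload)


def make_packet(seq, payload):
    """Sender side: attach a sequence number and a checksum."""
    return (seq, payload, checksum(seq, payload))


class Receiver:
    def __init__(self):
        self.seen = set()       # sequence numbers already delivered
        self.delivered = []     # payloads handed to the application

    def receive(self, packet):
        seq, payload, received_checksum = packet
        if checksum(seq, payload) != received_checksum:
            return "corrupted"  # contents changed in transit
        if seq in self.seen:
            return "duplicate"  # same message delivered more than once
        self.seen.add(seq)
        self.delivered.append(payload)
        return "delivered"
```

Delivering the same packet twice yields "delivered" and then "duplicate", while a packet whose payload was altered after the checksum was computed yields "corrupted". A checksum of this kind guards against accidental corruption only; deliberate tampering is a matter for the security model.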

Timing Failures:

Timing failures are applicable in synchronous distributed systems where time limits are set on process execution time, message delivery time and clock drift rate. In an asynchronous distributed system, an overloaded server may respond too slowly, but we cannot say that it has a timing failure since no guarantee has been offered.

Security Model:

The security model discusses the possible threats to processes and communication channels. It introduces the concept of a secure channel, which is secure against those threats. Some of those threats relate to integrity: malicious users may tamper with messages or replay them. Others threaten their privacy. Another security issue is the authentication of the principal (user or server) on whose behalf a message was sent. Secure channels use cryptographic techniques to ensure the integrity and privacy of messages and to authenticate pairs of communicating principals.

The security of a distributed system can be achieved by securing the processes and the channels used for their interactions and by protecting the objects (e.g. web pages, databases etc) that they encapsulate against unauthorized access. Threats to processes (like server or client processes) include not being able to reliably determine the identity of the sender.

Threats to communication channels include copying, altering, or injecting messages as they traverse the network and its routers. This presents a threat to the privacy and integrity of information. Another form of attack is to save copies of a message and replay them at a later time, making it possible to reuse the message over and over again (e.g. to remove a sum from a bank account repeatedly). Encryption of messages and authentication using digital signatures are used to defeat these security threats.
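
Tamper detection and replay rejection can be sketched with Python's standard library. Note the substitution: the text mentions digital signatures, whereas this sketch uses an HMAC, a shared-key message authentication code, because it needs no third-party package. The message counter, the `Verifier` class, and the key handling are assumptions, and the sketch provides no encryption, so it does not address privacy.

```python
import hashlib
import hmac


def sign(key, counter, body):
    """Compute an authentication tag over the counter and the message body."""
    return hmac.new(key, counter.to_bytes(8, "big") + body, hashlib.sha256).digest()


class Verifier:
    def __init__(self, key):
        self.key = key
        self.highest_counter = -1   # largest counter accepted so far

    def accept(self, counter, body, tag):
        expected = sign(self.key, counter, body)
        if not hmac.compare_digest(expected, tag):
            return False            # altered or forged message
        if counter <= self.highest_counter:
            return False            # replay of an earlier message
        self.highest_counter = counter
        return True
```

A message accepted once is rejected when replayed with the same counter, and a message whose body or counter was changed fails the tag check because the attacker does not hold the key.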

Variations of Client Server Architecture:

· Proxy server

· Thin Client

· Mobile Agent

Proxy Server:

Proxy Server refers to a server that acts as an intermediary between client and server. The proxy server is a computer on the internet that accepts the incoming requests from the client and forwards those requests to the destination server. It has its own IP address. It separates the client program and web server from the global network.

The purpose of proxy servers is to increase the availability and performance of the service by reducing the load on the wide area network and web servers. Proxy servers can take on other roles; for example, they may be used to access remote web servers through a firewall.
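
One way a proxy can reduce load on web servers is by caching responses. Caching is an assumption of this sketch rather than something the text spells out, and the class names are hypothetical; expiry and invalidation of cached pages are left out.

```python
class OriginServer:
    """Stands in for a remote web server; counts the requests it handles."""

    def __init__(self, pages):
        self.pages = pages
        self.requests_served = 0

    def get(self, url):
        self.requests_served += 1
        return self.pages.get(url, "404 Not Found")


class CachingProxy:
    """Intermediary that forwards a request only on a cache miss."""

    def __init__(self, origin):
        self.origin = origin
        self.cache = {}

    def get(self, url):
        if url not in self.cache:
            self.cache[url] = self.origin.get(url)   # forward to the origin
        return self.cache[url]                       # answer from the cache
```

If two clients request the same page through the proxy, the origin server handles only the first request; the second is answered from the proxy's cache without crossing the wide area network.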

Thin Client:

A thin client is a computer that runs from resources stored on a central server instead of a localized hard drive; it follows a virtual desktop computing model.

Thin clients are small, silent devices that communicate with a central server giving a computing experience that is largely identical to that of a PC.

Mobile Agent:

Mobile Agents are transportable programs that can move over the network and act on behalf of the user. A mobile agent is a running program (including both code and data) that travels from one computer to another in a network carrying out a task on someone’s behalf, such as collecting information, and eventually returning with the results. A mobile agent may make many invocations to local resources at each site it visits – for example, accessing individual database entries.

A mobile agent is program code that migrates from one machine to another. The code, which is an executable program, is called a script. In addition, agents carry data values or procedure arguments that need to be transported across machines. The agent code is executed at the host machine, where the agent can interact with the variables of programs running on the host machine, use its resources, and take autonomous routing decisions. During migration, the process in execution transports its state from one machine to another while keeping its data intact.

Agent migration is possible via the following steps:

1. The sender writes the script and calls a procedure submit with the parameters (name, parameters, target). The state of the agent and the parameters are marshaled and the agent is sent to the target machine. The sender either blocks itself or continues with an unrelated activity at its own site.

2. The agent reaches the destination, where an agent server handles it. The server authenticates the agent, unmarshals its parameters, creates a separate process or thread, and schedules it for the execution of the agent script.

3. When the script completes its execution, the server terminates the agent process, marshals the state of the agent as well as the results of the computation if any, and forwards it to the next destination, which can be a new machine, or the sender. The choice of the next destination follows from the script.
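
The three steps above can be sketched as a single-process simulation. Several simplifications are assumed: only the agent's parameters and state are marshaled (as JSON), the script itself is fixed inside the agent server rather than carried with the agent, authentication is omitted, and the host data, the itinerary parameter, and the function bodies are all hypothetical.

```python
import json

# Hypothetical data held at each host the agent may visit.
HOSTS = {
    "vendor_a": {"price": 120},
    "vendor_b": {"price": 95},
}


def submit(name, parameters, target):
    """Step 1: marshal the agent's name, parameters and initial state."""
    agent = {"name": name, "parameters": parameters, "state": {"best": None}}
    return target, json.dumps(agent)


def agent_server(host, wire_data):
    """Steps 2 and 3: unmarshal, run the script, marshal, choose the next stop."""
    agent = json.loads(wire_data)
    price = HOSTS[host]["price"]                 # access a local resource
    best = agent["state"]["best"]
    if best is None or price < best["price"]:
        agent["state"]["best"] = {"host": host, "price": price}
    itinerary = agent["parameters"]["itinerary"]
    position = itinerary.index(host)
    if position + 1 < len(itinerary):
        next_stop = itinerary[position + 1]      # move on to a new machine
    else:
        next_stop = "sender"                     # or return to the sender
    return next_stop, json.dumps(agent)


def run(itinerary):
    destination, wire_data = submit("price_check", {"itinerary": itinerary}, itinerary[0])
    while destination != "sender":
        destination, wire_data = agent_server(destination, wire_data)
    return json.loads(wire_data)["state"]["best"]
```

Running the agent over both hypothetical vendors returns the host with the lower price. The state travels as marshaled data between visits, which is the part of migration this sketch is meant to show.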

Mobile agents might be used to install and maintain software on the computers within an organization or to compare the prices of products from a number of vendors by visiting each vendor’s site and performing a series of database operations.

Mobile agents (like mobile code) are a potential security threat to the resources in computers that they visit. The environment receiving a mobile agent should decide which of the local resources it should be allowed to use, based on the identity of the user on whose behalf the agent is acting – their identity must be included in a secure way with the code and data of the mobile agent.

In addition, mobile agents can themselves be vulnerable – they may not be able to complete their task if they are refused access to the information they need. The tasks performed by mobile agents can be performed by other means. For example, web crawlers that need to access resources at web servers throughout the Internet work quite successfully by making remote invocations to server processes. For these reasons, the applicability of mobile agents may be limited.